I am a PhD student at Beihang University, supervised by Prof. Xianglong Liu. My current focus is the practical deployment of large AIGC models, such as LLMs and diffusion models, including inference acceleration and model quantization. I hope that advanced technology can bring real convenience to people’s lives.

📝 Publications

2026

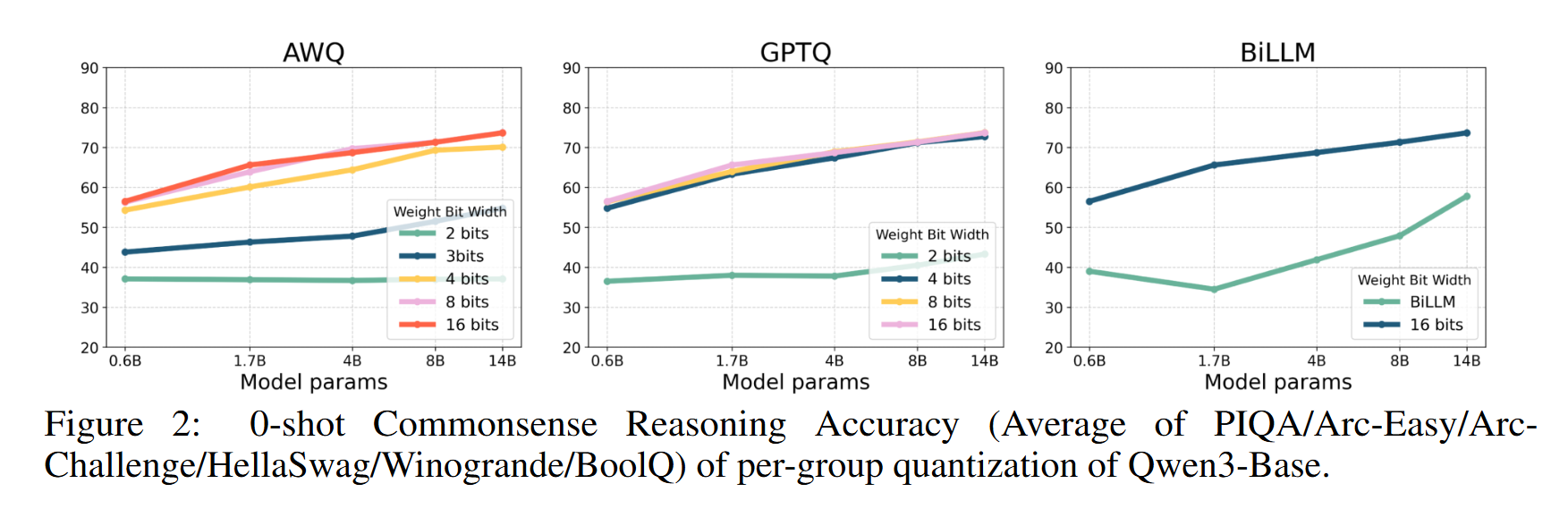

An Empirical Study of Qwen3 Quantization

Xingyu Zheng, Yuye Li, Haoran Chu, Yue Feng, Xudong Ma, Jie Luo, Jinyang Guo, Haotong Qin$^{\dagger}$, Michele Magno, Xianglong Liu

- This paper explores Qwen3’s capabilities when quantized to low bit-width, demonstrating its value in advancing future models.

2025

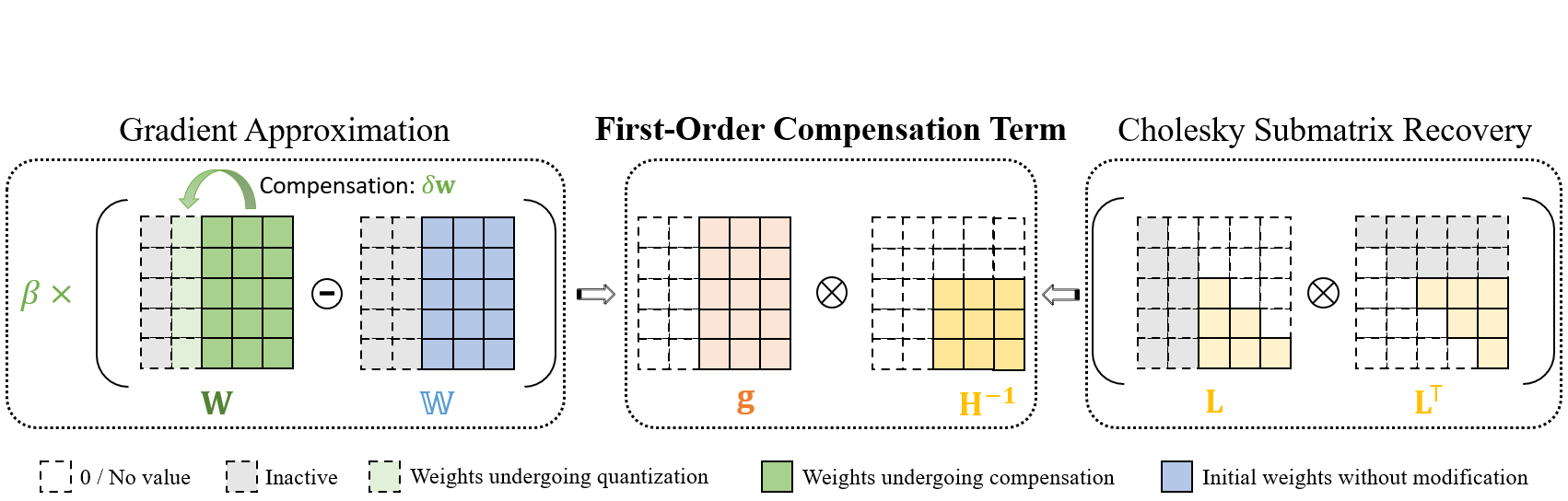

First-Order Error Matters: Accurate Compensation for Quantized Large Language Models

Xingyu Zheng*, Haotong Qin*, Yuye Li, Haoran Chu, Jiakai Wang, Jinyang Guo, Michele Magno, Xianglong Liu$^{\dagger}$

- This paper proposes FOEM, a novel post-training quantization (PTQ) method for LLMs that explicitly incorporates first-order gradient terms to improve quantization error compensation.

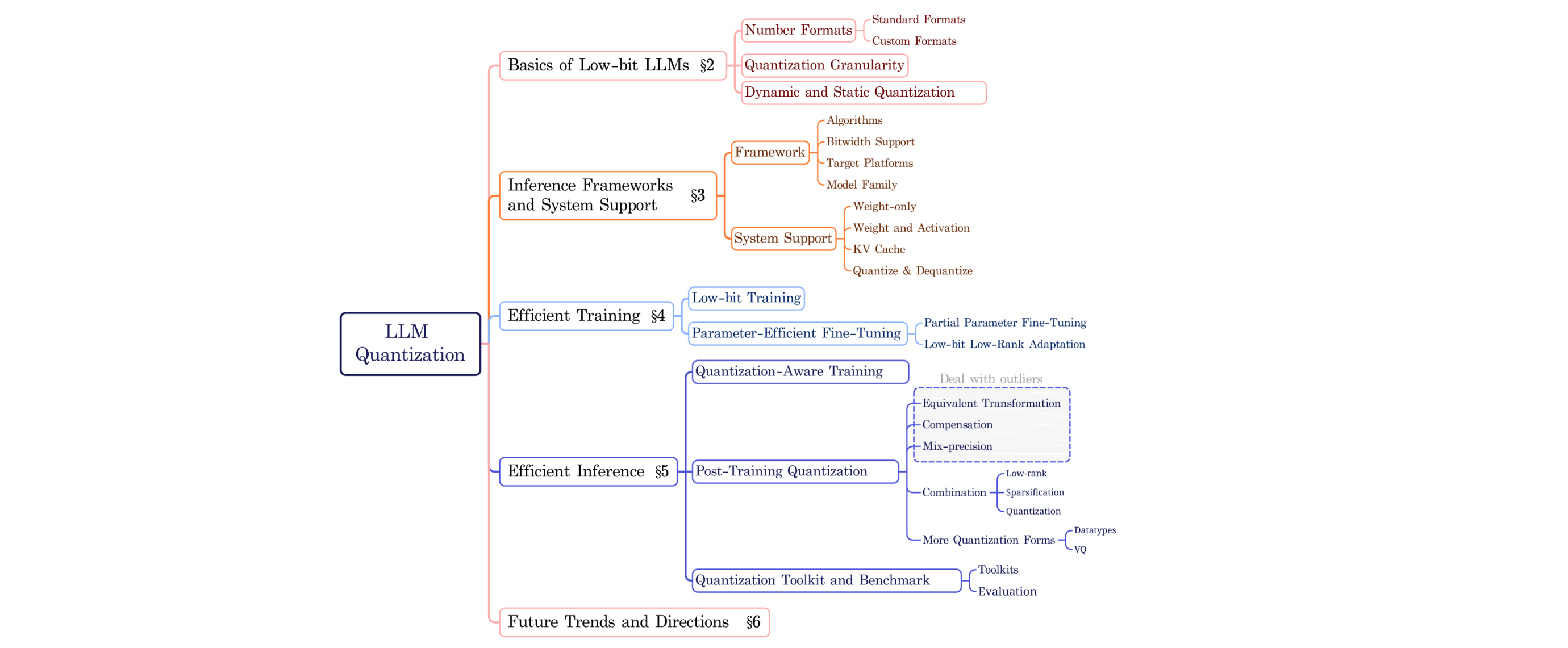

A Survey of Low-bit Large Language Models: Basics, Systems, and Algorithms

Ruihao Gong, Yifu Ding, Zining Wang, Chengtao Lv, Xingyu Zheng, Jinyang Du, Haotong Qin, Jinyang Guo, Michele Magno, Xianglong Liu$^{\dagger}$

- This paper presents a comprehensive survey of low-bit quantization methods tailored for LLMs, covering the fundamental principles, system implementations, and algorithmic strategies.

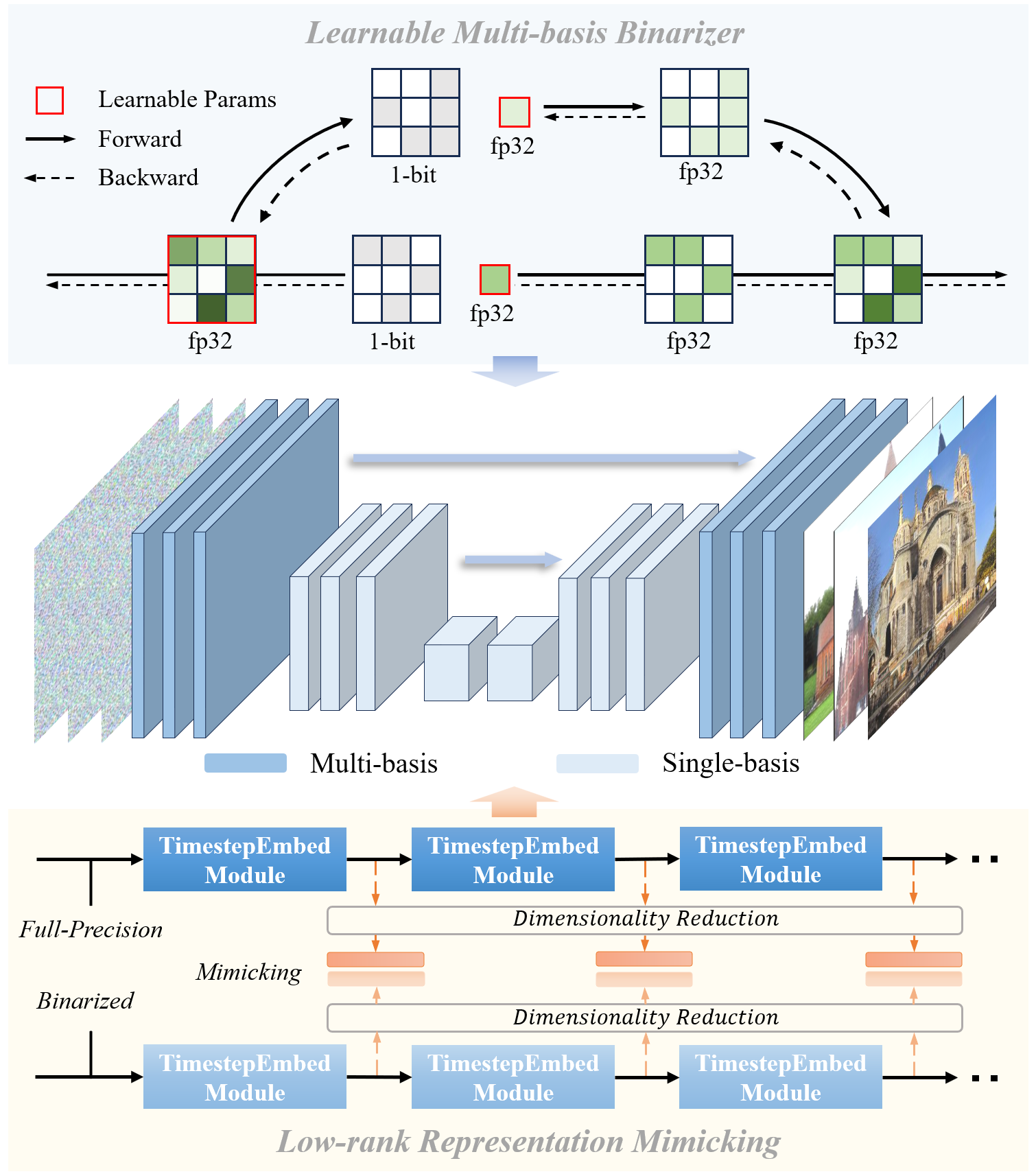

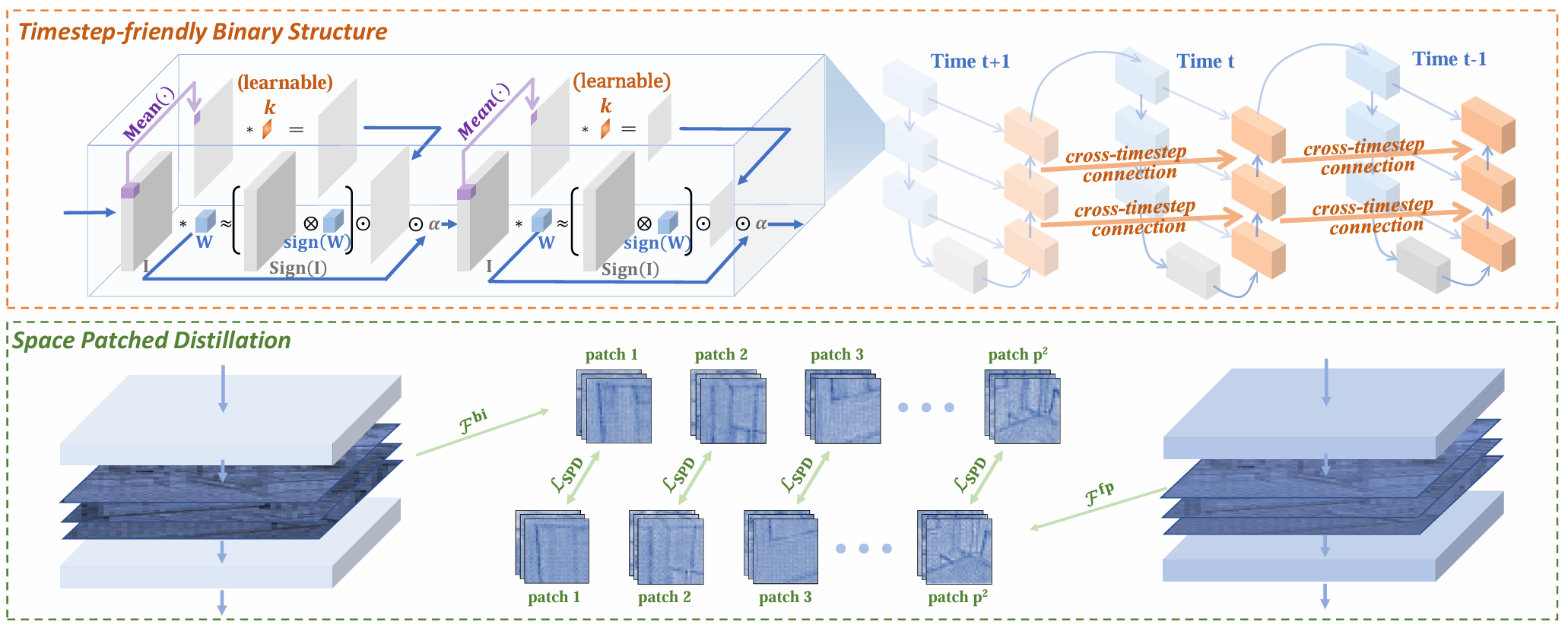

BinaryDM: Accurate Weight Binarization for Efficient Diffusion Models

Xingyu Zheng, Xianglong Liu$^{\dagger}$, Haotong Qin, Xudong Ma, Mingyuan Zhang, Haojie Hao, Jiakai Wang, Zixiang Zhao, Jinyang Guo, Michele Magno

- This paper proposes BinaryDM, an accurate quantization-aware training approach that pushes the weights of diffusion models towards the 1-bit limit.

2024

BiDM: Pushing the Limit of Quantization for Diffusion Models

Xingyu Zheng, Xianglong Liu$^{\dagger}$, Yichen Bian, Xudong Ma, Yulun Zhang, Jiakai Wang, Jinyang Guo, Haotong Qin

- This paper proposes BiDM, a novel method that fully binarizes both the weights and activations of diffusion models (DMs), pushing quantization to the 1-bit limit.

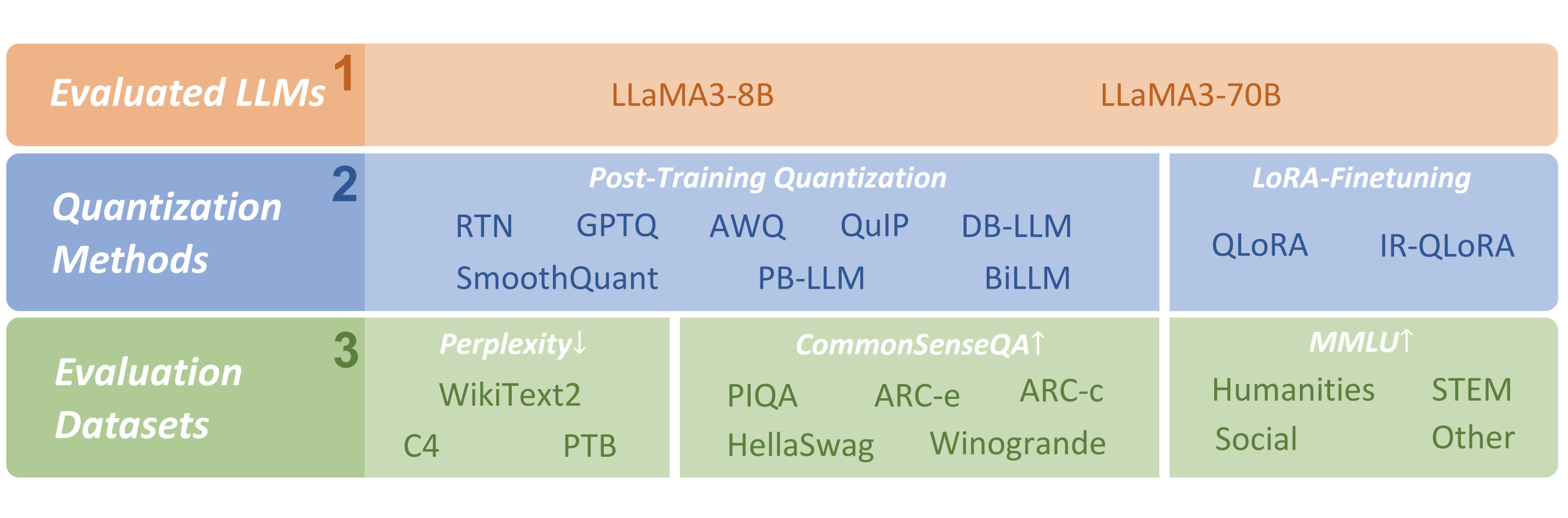

An Empirical Study of LLaMA3 Quantization: From LLMs to MLLMs

Wei Huang*, Xingyu Zheng*, Xudong Ma*, Haotong Qin$^{\dagger}$, Chengtao Lv, Hong Chen, Jie Luo, Xiaojuan Qi, Xianglong Liu, Michele Magno

- This paper explores LLaMA3’s capabilities when quantized to low bit-width, demonstrating its value in advancing future models.

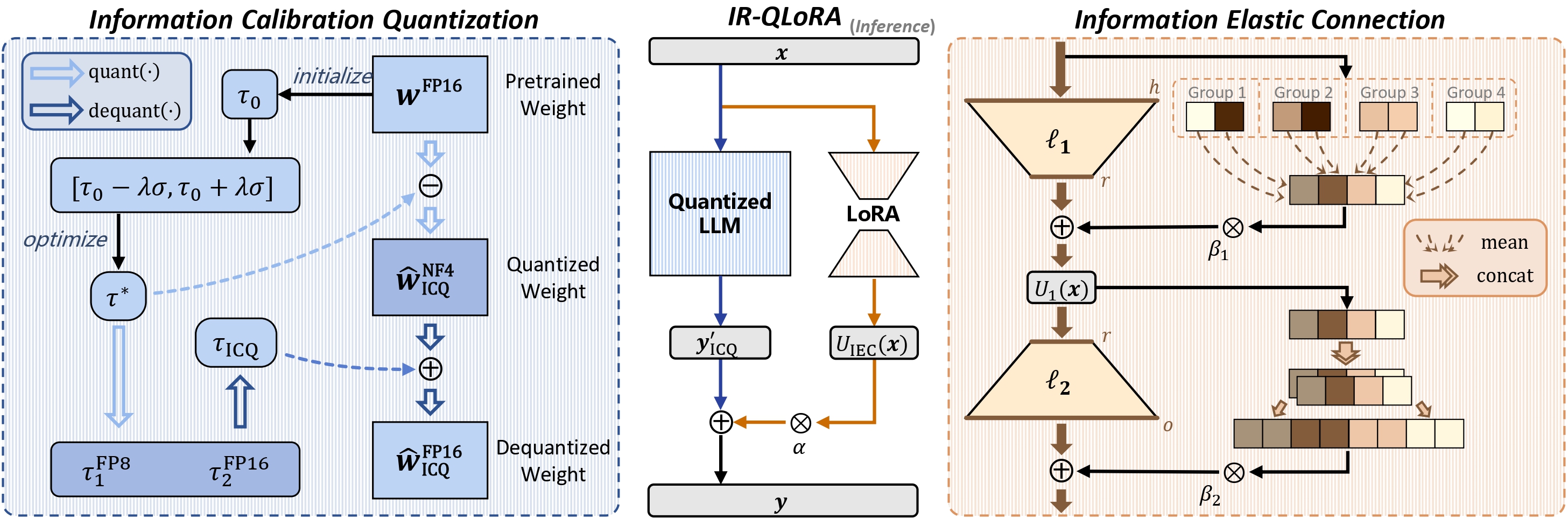

Accurate LoRA-Finetuning Quantization of LLMs via Information Retention

Haotong Qin*, Xudong Ma*, Xingyu Zheng, Xiaoyang Li, Yang Zhang, Shouda Liu, Jie Luo, Xianglong Liu$^{\dagger}$, Michele Magno

- This paper proposes IR-QLoRA, a novel method that uses information retention to make LoRA-finetuned quantized LLMs highly accurate.

2023

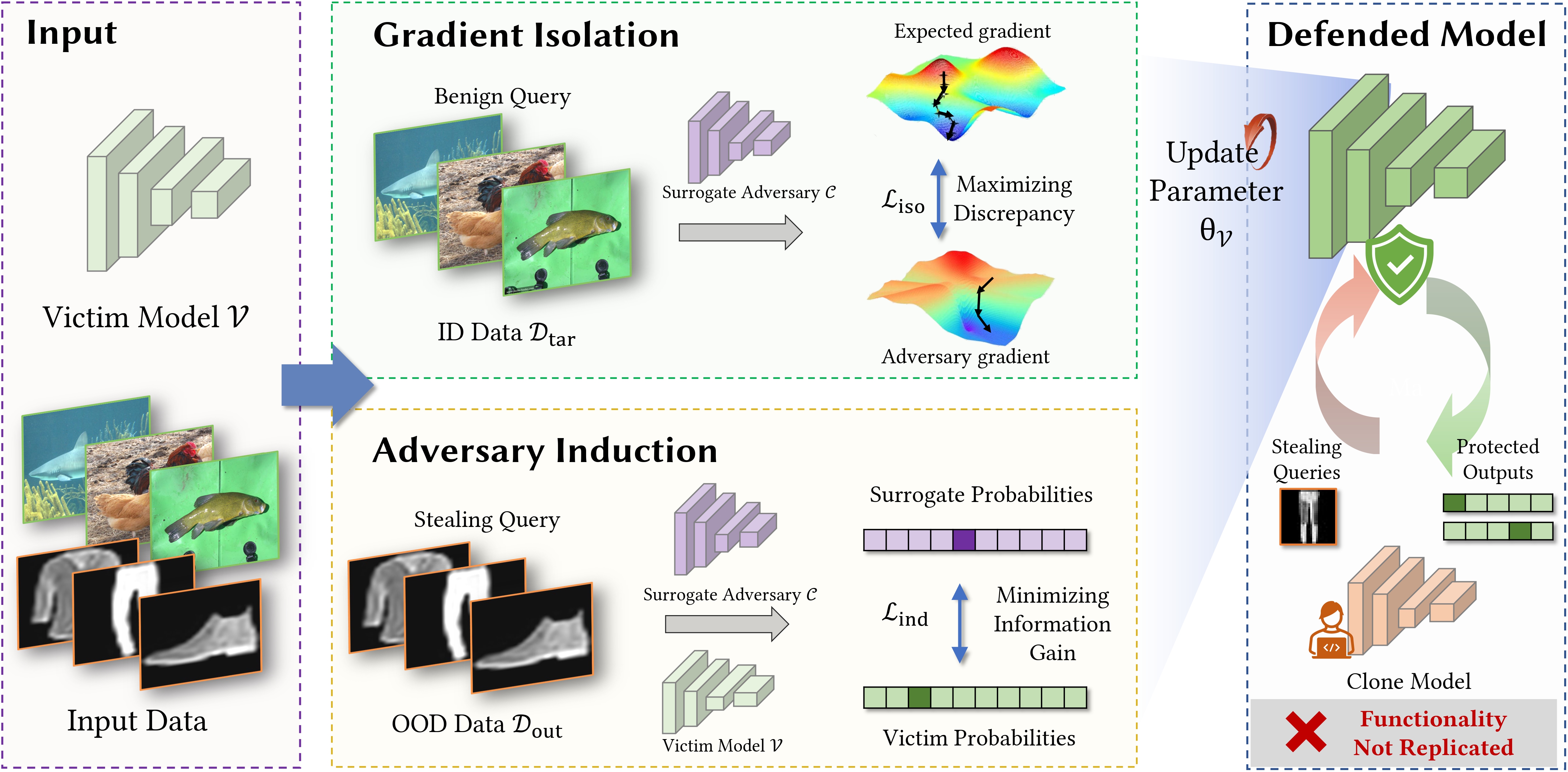

Isolation and Induction: Training Robust Deep Neural Networks against Model Stealing Attacks

Jun Guo, Xingyu Zheng, Aishan Liu$^{\dagger}$, Siyuan Liang, Yisong Xiao, Yichao Wu, Xianglong Liu

- This paper proposes Isolation and Induction (InI), a novel and effective training framework for model stealing defenses.

Book

Generalizing from Limited Resources in the Open World

Jinyang Guo, Yuqing Ma, Yifu Ding, Ruihao Gong, Xingyu Zheng, Changyi He, Yantao Lu, Xianglong Liu

- This book presents the proceedings of the Second International Workshop GLOW 2024, held in conjunction with the International Joint Conference on Artificial Intelligence (IJCAI 2024) in Jeju Island, South Korea, in August 2024.

🎖 Honors

- 2025, Tencent Rhino-Bird Elite Training Program.

- 2024, Honor Student of Beihang University.

- 2024, Excellent Undergraduate Graduation Thesis.

- 2024, Excellent Graduate of Beihang University.

- 2022, Lanqiao Cup, National First Prize.

📖 Education

- 2024 - now, PhD student, Beihang University.

- 2020 - 2024, Undergraduate student, Beihang University.

💬 Academic Activities

- 2025.08, Organizer, Practical-DL 2025.

- 2024.08, Publicity Chair, GLOW 2024.

💻 Internships

- 2025.08 - now, Tencent, Shanghai.